There seems to be a fair bit of chat on the internet at the moment about building web scrapers, most of which use Make and AI. I found this technically interesting, so I thought I would build an automated web scraper in Tape without any external automation tools like Make or Zapier.

I will admit to a couple of things here:

- This was one of those “evening TV is boring” projects, so the level of thought given to the tools used is limited.

- I am slightly unsure about the morality of web scraping, so let’s ignore that for now. (Again, I have not spent much time thinking about it.)

The first thing we need to do is pick the webpage/data source we want. For ease, I picked currency exchange data. I did a quick search for an exchange site and picked the first one in the list (it turns out this site also has an API, so we could get the same data that way, but that’s not the point of this exercise).

For this, I just want the following exchange rates:

- US Dollar

- UK Pound

- Euro

- Yen

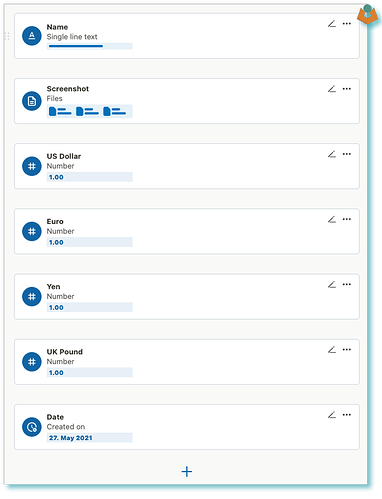

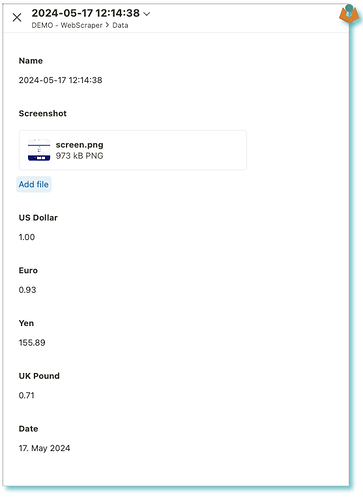

Next, we build a quick app in Tape with fields for the currencies, a name, and the created date.

We can now build the automations.

Automation

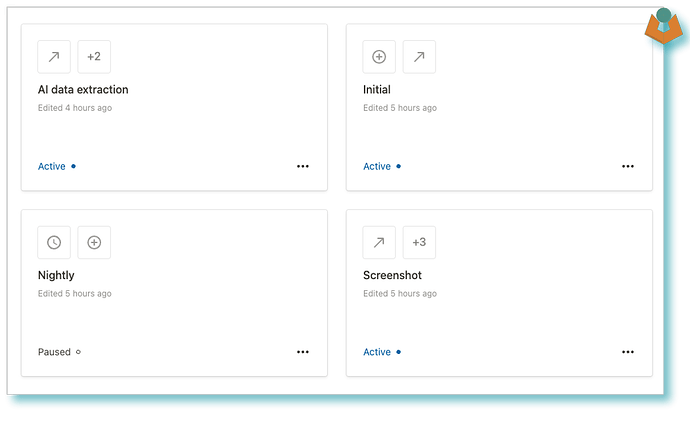

In my example, I’ve used four automations:

- Nightly: all this does is run every morning and create a record, putting the current date in the name field.

- Initial: this is an on-create trigger, and all it does is trigger the next automation.

- Screenshot: this uses the tool that @1F2Ns mentions here: [✅ Solution] Website screenshot API | Screenshot Machine. We use it to go to the relevant web page and save it as a PNG file on our record. Once it has done that, it triggers our last automation.

- Data extraction: this takes our screenshot and sends it to OpenAI GPT-4o for analysis and data extraction. Once Tape gets the data back, we add it to the relevant fields.

The Details

We will ignore the 1st and 2nd automations and just concentrate on the 3rd and 4th.

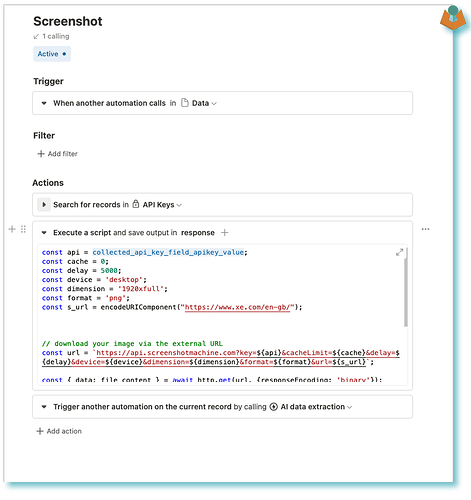

The Screenshot

I use the tool mentioned above, which goes to the specified website and grabs a screenshot. We then save this to the Tape record. However, it is worth noting that you should be able to pass the screenshot URL straight into your GPT request instead, if you wanted to.

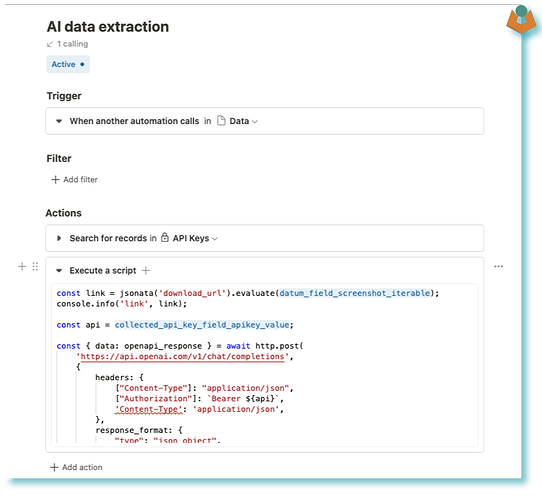

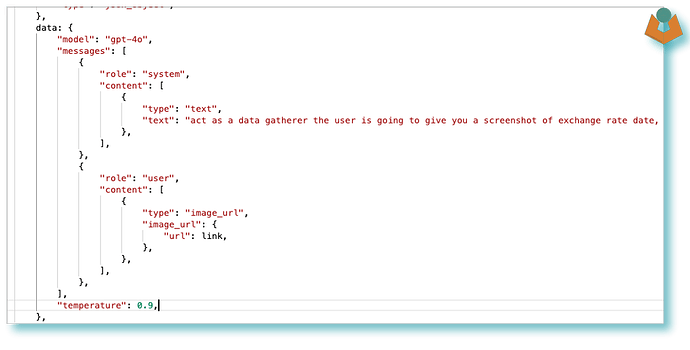
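
For anyone who wants to see the screenshot call outside of Tape, here is a minimal sketch of building the request URL. It assumes the Screenshot Machine endpoint and the `key`, `url`, `dimension` and `format` parameters from their public API docs, so check those against your own account. The function name, the API key and the example page address are placeholders of mine, not what is in my automation.

```python
from urllib.parse import urlencode

# Assumption: endpoint and parameter names as described in Screenshot
# Machine's public API docs. Verify against your own account.
SCREENSHOT_ENDPOINT = "https://api.screenshotmachine.com"


def build_screenshot_url(api_key: str, page_url: str,
                         dimension: str = "1024x768") -> str:
    """Return a GET URL that asks the service for a PNG of page_url."""
    params = {
        "key": api_key,          # placeholder: your own API key
        "url": page_url,         # urlencode percent-encodes this value
        "dimension": dimension,  # width x height of the capture
        "format": "png",         # we store a PNG on the Tape record
    }
    return f"{SCREENSHOT_ENDPOINT}/?{urlencode(params)}"


if __name__ == "__main__":
    # Hypothetical page address, purely for illustration.
    print(build_screenshot_url("YOUR_API_KEY", "https://example.com/rates"))
```

The page address has to be percent-encoded because it is itself a URL sitting inside a query string, which is the part that is easiest to get wrong by hand.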

The Data Extraction

As with most things AI, the prompt is key. I also find that these newer models often try to be too clever, so you may need to be even more explicit about what you are asking for.

We write a system prompt asking the AI to extract the currencies we want from the image that will be provided by the user. The response should provide this data in the JSON format given in the example.

In the user prompt, we provide the Tape file download link for our screenshot.

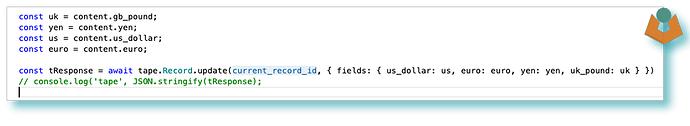
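
As a rough illustration of the system and user prompts described above, this is roughly what the request body could look like if you were building it by hand for OpenAI's Chat Completions API. The prompt wording, the currency keys and the example numbers in the prompt are my assumptions for this sketch (the numbers are placeholders, not real rates), and the function only builds the payload; it does not send anything.

```python
import json

# Hypothetical keys: use whatever matches the fields in your own Tape app.
CURRENCIES = ["USD", "GBP", "EUR", "JPY"]

# Being very explicit here is deliberate: exact keys, numbers only, no extras.
SYSTEM_PROMPT = (
    "You extract exchange rates from a screenshot supplied by the user. "
    "Return ONLY a JSON object with exactly these keys: "
    + ", ".join(CURRENCIES)
    + ". Each value must be a number. Do not add commentary or extra keys. "
    # Placeholder values that only show the shape of the answer.
    'Example: {"USD": 1.0, "GBP": 0.5, "EUR": 0.5, "JPY": 100.0}'
)


def build_request(image_url: str, model: str = "gpt-4o") -> dict:
    """Build a Chat Completions payload with the screenshot as an image input."""
    return {
        "model": model,
        # JSON mode: asks the model to reply with a single JSON object.
        "response_format": {"type": "json_object"},
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": [
                {"type": "text", "text": "Extract the rates from this screenshot."},
                # image_url would be the Tape file download link.
                {"type": "image_url", "image_url": {"url": image_url}},
            ]},
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_request("https://example.com/screenshot.png"), indent=2))
```

Putting the example JSON in the system prompt and the image in the user message mirrors the split described above: the instructions stay fixed and only the screenshot link changes from day to day.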

We send it all off to the AI and await the response. When it comes back, we parse it and update the record.
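
The parsing step can be sketched like this. It is a minimal example under my own assumptions: the reply is expected to be a JSON object keyed by currency code (the key names are hypothetical), and because models sometimes wrap the JSON in a Markdown fence or add a stray sentence, the sketch keeps only the text between the first `{` and the last `}` before parsing.

```python
import json

# Hypothetical keys: these should match whatever the prompt asked for.
EXPECTED_KEYS = ("USD", "GBP", "EUR", "JPY")


def parse_rates(reply_text: str) -> dict[str, float]:
    """Turn the model's reply into {currency: rate}, or raise ValueError."""
    text = reply_text.strip()
    # Keep only the outermost JSON object, dropping any fence or chatter.
    start, end = text.find("{"), text.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in reply")
    data = json.loads(text[start:end + 1])  # JSONDecodeError is a ValueError
    missing = [key for key in EXPECTED_KEYS if key not in data]
    if missing:
        raise ValueError(f"missing currencies: {missing}")
    # float() also accepts numeric strings such as "0.79"; extra keys are ignored.
    return {key: float(data[key]) for key in EXPECTED_KEYS}
```

Failing loudly when a currency is missing seems better than writing a half-filled record, since a blank field is easy to miss until you come to chart the data.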

The updated record then looks like this:

Next Steps

From this point, the way I see it, we could do a few things:

- Build a Tape dashboard displaying the data we are collecting.

- Upload the collected data to something like MongoDB.

- Send an amalgamation of data back up to an AI for trend analysis or similar.