A couple of quick tips:

- OpenAI has just released a new model, `gpt-4o-mini`. It has nearly all of the power of GPT-4o at a fraction of the cost of GPT-3.5.

For example, summarising an average email might use something like 2,603 input tokens and 1,372 output tokens, which works out to a cost per email of:

GPT-4o = $0.033595

GPT-3.5 = $0.0033595

GPT-4o Mini = $0.00121365
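Each figure above is the token count multiplied by the price per million tokens, with input and output priced separately. The prices used here are my assumption of the list prices at the time of writing: $5 / $15 for GPT-4o, $0.50 / $1.50 for GPT-3.5 Turbo and $0.15 / $0.60 for GPT-4o Mini, per million input / output tokens. They reproduce the numbers above, but check OpenAI's pricing page for current rates. Worked through for GPT-4o Mini:

```latex
\text{cost} = \frac{T_{\text{in}} \cdot p_{\text{in}} + T_{\text{out}} \cdot p_{\text{out}}}{10^{6}}

\text{GPT-4o Mini: } \frac{2603 \times 0.15 + 1372 \times 0.60}{10^{6}}
  = \frac{390.45 + 823.2}{10^{6}}
  = \$0.00121365
```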

I know those costs are tiny, but if each email is spam-checked by AI, categorised, summarised and given a draft reply (if needed), that is up to four calls per email. Multiply that by, say, 1,000 emails a month (assuming all four calls run on every email) and the savings from a model that is both better and cheaper start to matter:

GPT-4o = $134.38

GPT-3.5 = $13.44

GPT-4o Mini = $4.85

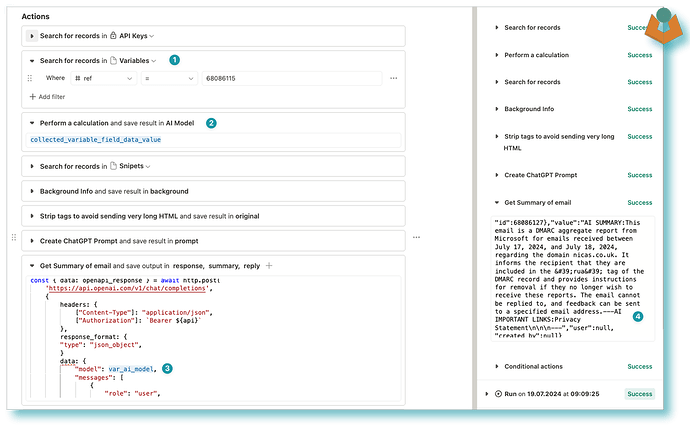
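If you want to rerun these numbers for your own volumes, here is a minimal sketch of the same arithmetic. It assumes the per-million-token prices listed above (my assumption, not from OpenAI's docs here) and that every call uses the same token counts as the example email; the function names are hypothetical.

```python
# Hypothetical cost estimator reproducing the monthly figures above.
# Prices are USD per million tokens (input, output); these are an assumption
# based on list prices at the time of writing, so check OpenAI's pricing page.
PRICES_PER_MILLION = {
    "GPT-4o": (5.00, 15.00),
    "GPT-3.5": (0.50, 1.50),
    "GPT-4o Mini": (0.15, 0.60),
}


def cost_per_call(model: str, tokens_in: int, tokens_out: int) -> float:
    """Return the USD cost of one API call with the given token counts."""
    price_in, price_out = PRICES_PER_MILLION[model]
    return (tokens_in * price_in + tokens_out * price_out) / 1_000_000


def monthly_cost(model: str, tokens_in: int, tokens_out: int,
                 calls_per_email: int, emails_per_month: int) -> float:
    """Assume every call uses the same token counts as the example email."""
    per_call = cost_per_call(model, tokens_in, tokens_out)
    return per_call * calls_per_email * emails_per_month


if __name__ == "__main__":
    # 4 calls per email: spam check, categorise, summarise, draft reply.
    for model in PRICES_PER_MILLION:
        total = monthly_cost(model, 2603, 1372, calls_per_email=4, emails_per_month=1000)
        print(f"{model}: ${total:.2f} per month")
```

Change `emails_per_month` or `calls_per_email` to match your own setup; if you skip the draft reply for most emails, the real totals will be lower.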

- That brings us to updating your Tape Workflows. If you only have one AI automation, great: you go in and change it. If you have 5, it gets more annoying. What if you have 50 or more?

I would suggest a ‘variables’ app (or whatever you would like to call it): just an app, hidden away, that holds information you want to use across multiple workflows, such as the model name. Then, in each workflow:

- Search for the relevant record holding the data you want to pull in

- Add the data to a variable

- Use the variable in your call to OpenAI

- Enjoy the updated model
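The same pattern, sketched in Python purely for illustration: it assumes the variables record has already been fetched by your search step (Tape's own search and variable actions are not shown, and the dict below is only a stand-in for that record). The field name `openai_model`, the helper functions and the prompt are hypothetical; only the shape of the request body follows OpenAI's Chat Completions format.

```python
import json

# Hypothetical stand-in for the record in your hidden 'variables' app.
# The field name "openai_model" is an assumption, not a Tape built-in.
VARIABLES_RECORD = {"openai_model": "gpt-4o-mini"}


def get_variable(record: dict, name: str, default: str) -> str:
    """Read one shared setting, falling back to a default if it is missing or empty."""
    return record.get(name) or default


def build_chat_request(model: str, email_body: str) -> dict:
    """Build a Chat Completions request body that uses the shared model name."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": "Summarise the following email in a few sentences."},
            {"role": "user", "content": email_body},
        ],
    }


if __name__ == "__main__":
    model = get_variable(VARIABLES_RECORD, "openai_model", default="gpt-3.5-turbo")
    payload = build_chat_request(model, "Hi team, the meeting has moved to Thursday.")
    # This JSON is the body you would POST to https://api.openai.com/v1/chat/completions
    print(json.dumps(payload, indent=2))
```

The design choice is simply indirection: every workflow reads the model name from one record, so moving all 50 workflows to a new model means editing that single record.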